Test Results

Results Viewer

Testing Farm uses a simple results viewer, its Oculus component, for viewing test results.

It provides a unified interface for viewing results across all users of Testing Farm.

The results viewer is served as the index.html page of the Artifacts Storage.

The code is open for contributions if you would like to introduce improvements.

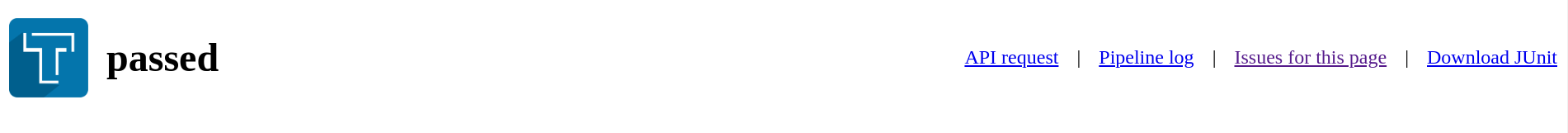

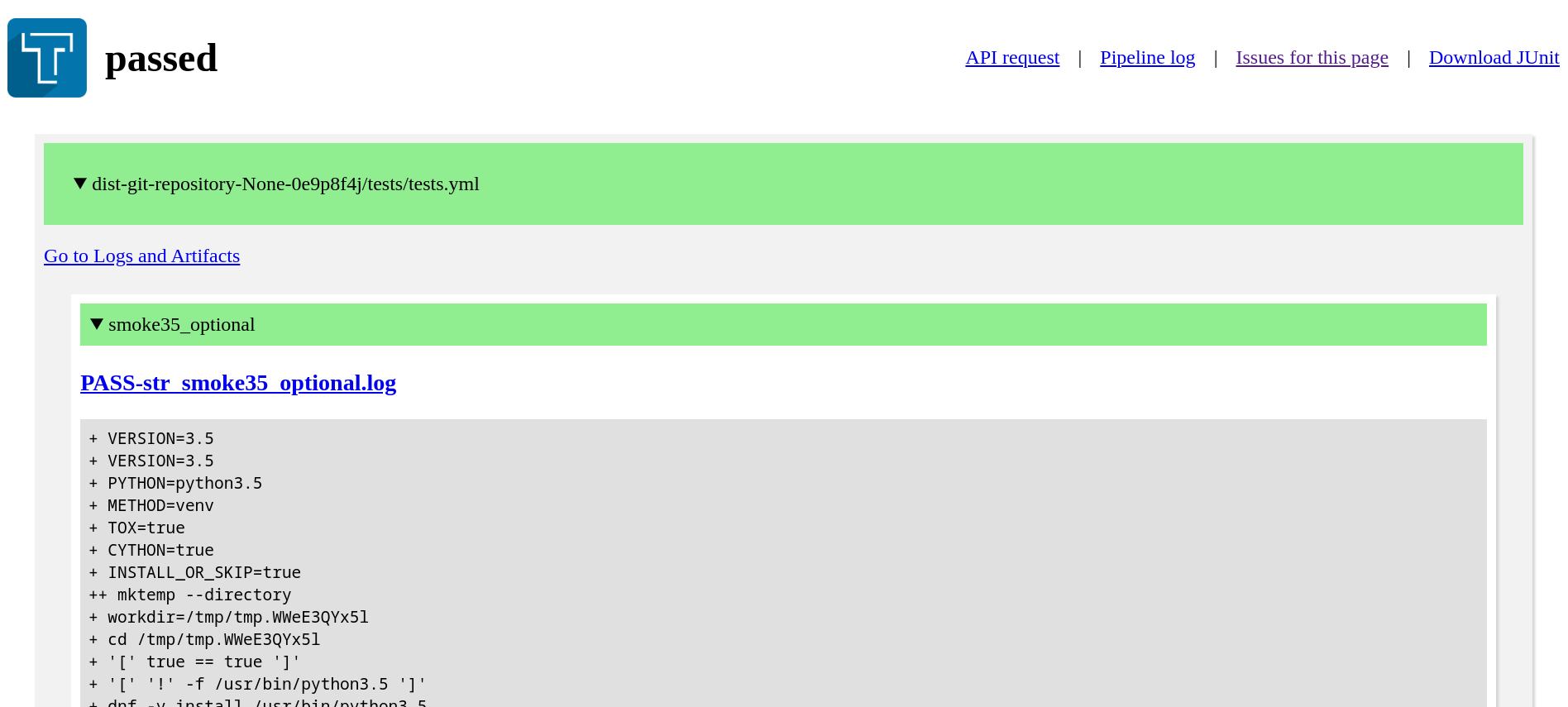

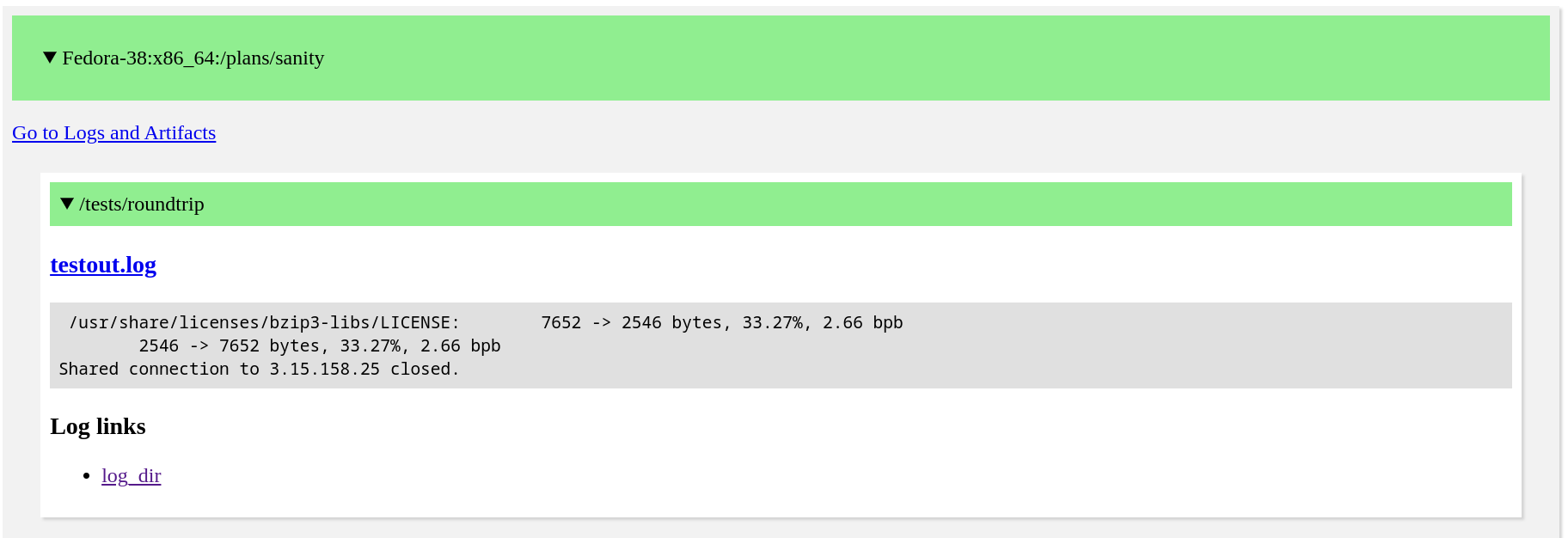

Tests Passed

If all tests passed, all results are collapsed to give a compact overview of all executed tests (or plans).

Here is an example of a run with all passed results:

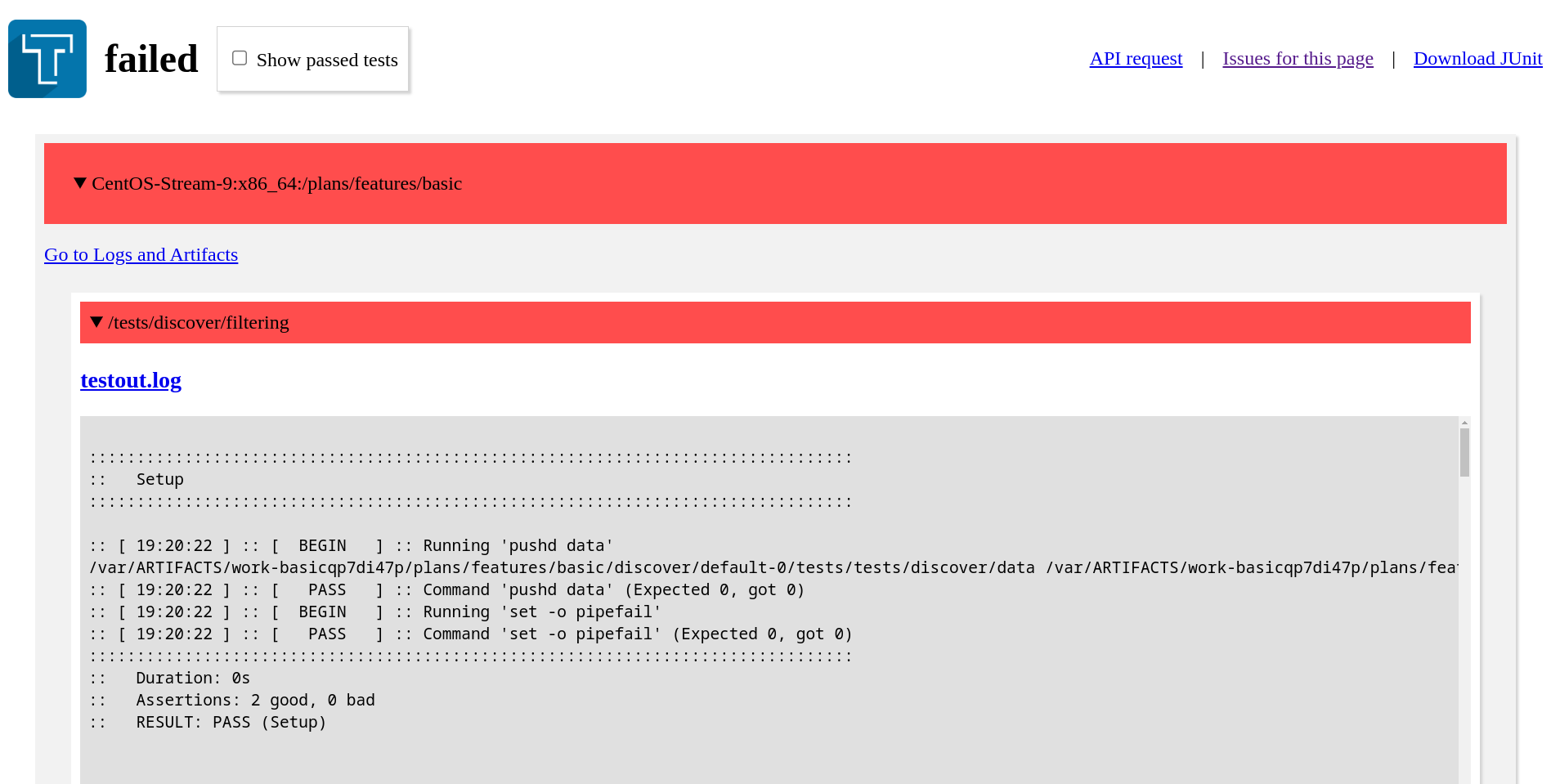

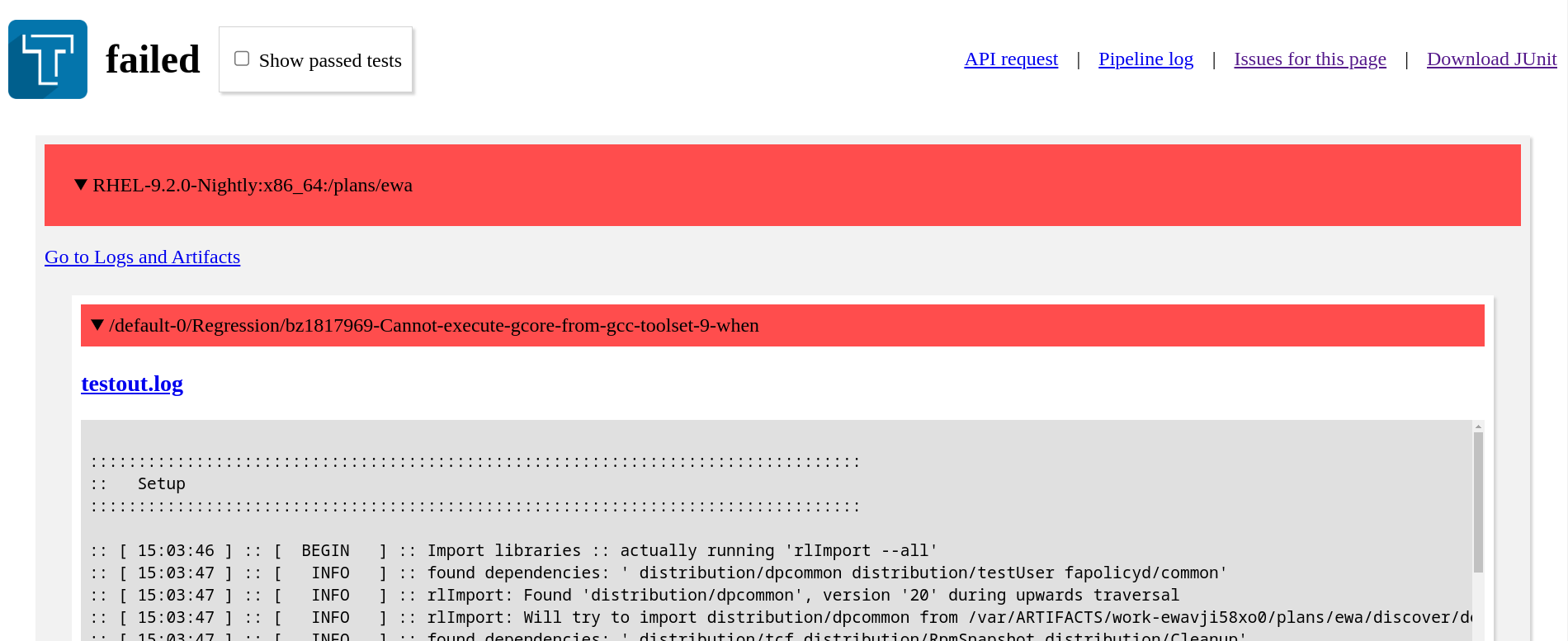

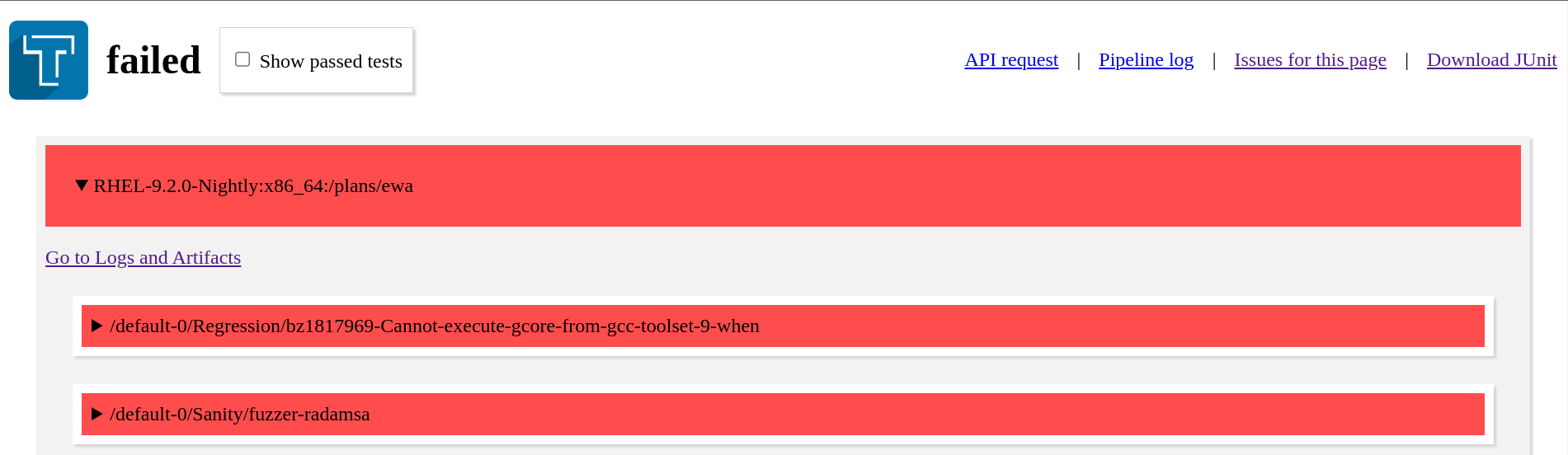

Tests Failed

When some of the tests fail, the viewer shows only the failed tests, expanded.

Passed tests are hidden by default. To view them, select the Show passed tests checkbox in the header.

Header

The results viewer header provides some useful links.

- API request: a link to the API request details, which provide additional information about the request. The JSON is not pretty-printed, so if your browser does not format JSON out of the box, use a JSON formatter to display it nicely.
- Pipeline log: the output of the Testing Farm Worker. In case of errors, it can provide more context about the issues hit in the pipeline.
- Issues for this page: a link to the viewer’s public issue tracker.
- Download JUnit: a link to download the results as a standard JUnit XML file. You can use this file to import the results into other result viewers.

Anatomy of the Test Results

See the Test Process page to understand more about how Testing Farm executes the tests.

In general, results are displayed as a list of one or more plans, where each of these plans has one or more tests.

In case of

    ├── plan1
    │   ├── test1
    │   ├── test2
    │   └── test3
    ├── plan2
    │   ├── test1
    │   ├── test2
    │   └── test3
    ...

Test Execution Logs

The test execution logs are the outputs of the executed tests. They are previewed directly in the results viewer, and a link to the test output file is also provided:

- For tmt tests, the link is called testout.log and points to the output of the tmt test execution.
- For STI tests, the link has a .log suffix and is named according to the generated test name. It points to the captured test output.

Additional links are provided at the bottom of the test execution logs.

These currently contain at least one item, log_dir:

- For tmt tests, this is the link to the test's execution data directory.
- For STI tests, this points to the working directory of the whole execution, i.e. it is the same for all tests.

In some cases, the log links provide additional logs, for example the logs from the rpminspect test.

Additional Logs and Artifacts

Additional logs and artifacts are provided for each tmt plan or STI playbook.

Use the Go to Logs and Artifacts link to scroll down to them quickly.

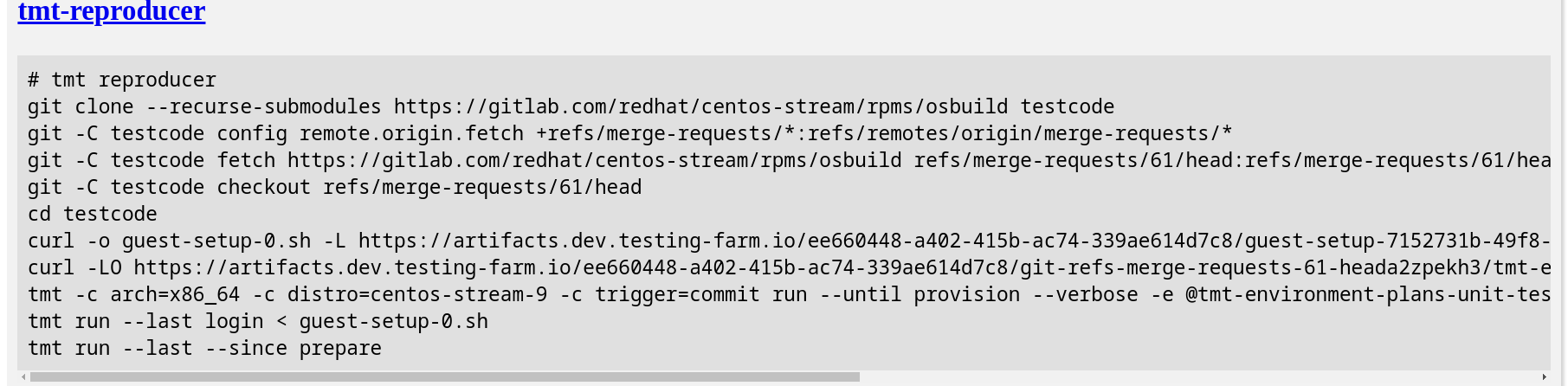

tmt-reproducer

See the Reproducer section for details.

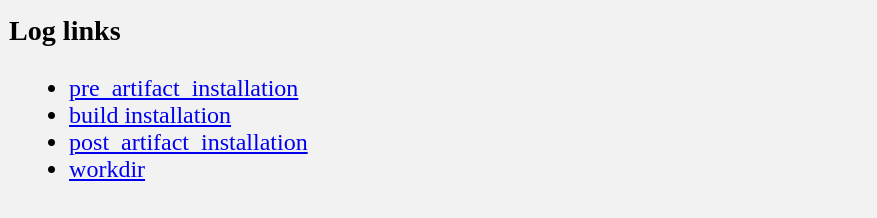

Log links

Currently, logs from the test environment preparation are included:

- pre_artifact_installation - logs from the pre-artifact installation sub-phase
- artifact_installation - logs from the artifact installation sub-phase
- post_artifact_installation - logs from the post-artifact installation sub-phase

For tmt tests, a link to the working directory with all tmt logs is also provided:

- workdir - here you can find, for example, the full tmt log, log.txt

Console Log

The serial console of the provisioned machine is available as console.log.

The content of the file can be incomplete, depending on the underlying infrastructure where the tests run.

Some infrastructures limit the maximum size of the console log.

If the console log is incomplete, check the console log snapshots in the workdir.

Console Support by Cloud Provider

Console support varies between cloud providers. If your tests rely heavily on console output for debugging, consider the infrastructure limitations below.

| Provider | Console Support | Notes |
|---|---|---|
| AWS | Limited | Serial console output may experience significant lag and can be incomplete. |
| OpenStack (PSI) | Good | Console output is generally reliable and complete. Recommended for tests that depend on console logs. |
| Beaker | Good | Console output is generally reliable and complete. Recommended for tests that depend on console logs. |
| IBM Cloud | Limited | Similar limitations to AWS. |

For the Red Hat Ranch, if you need reliable console output and your architecture supports it, consider requesting provisioning on Beaker or OpenStack instead of AWS or IBM Cloud.

Reproducer

For tmt tests, a code snippet is provided for reproducing the test execution on your localhost.

The reproducer is not available for STI tests.

The reproducer steps for the Red Hat Ranch are incorrect and need manual adjustments for the You can use the:

You can use this snippet to:

- clone the same test repository that was used for testing
- install the tested artifacts before running the tests
- run the tests on your localhost against an environment similar to the one used in CI

The artifacts installation is included only if applicable, as artifacts are optional in the Testing Farm request.

The tmt-reproducer is a work in progress and currently has these limitations:

- the pre-artifact installation and post-artifact installation sub-phases are not included
- the test environment is not the same as in Testing Farm, where the tests run on an AWS EC2 instance (or on other infrastructures)
- the compose version might not be the same

Despite these limitations, we believe the reproducer is very handy for debugging test failures, and we recommend using it when you run into problems.

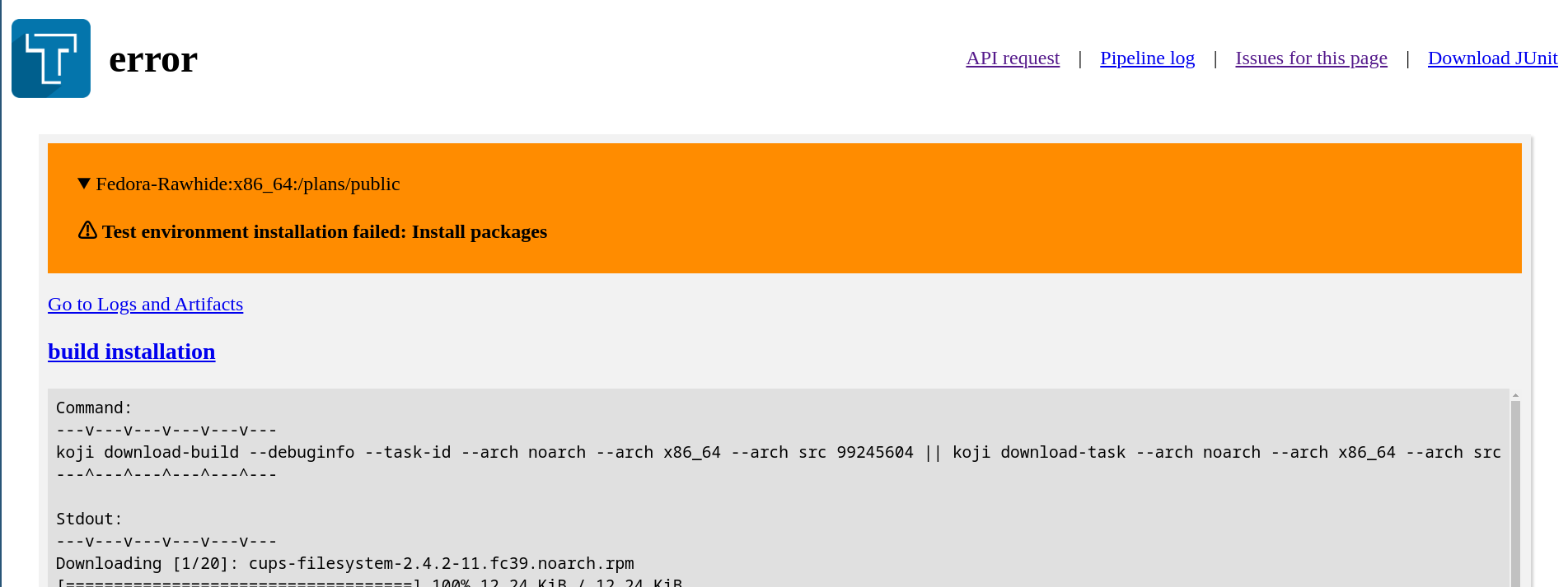

Errors

This section gives you some hints for investigating common errors you can encounter with Testing Farm.

When the request errors out, the viewer header shows an error.

All plans that failed with an error are marked in orange.

Errors can happen in various stages of the Test Process. Usually, a meaningful error message is shown in the plan summary, which should hint at what went wrong.

Provisioning

If Testing Farm is not able to provision resources required to run the tests, the test environment execution will fail.

This can have multiple causes:

- You used an invalid compose or architecture; see the list of composes and supported architectures.
- There is an outage; see the Testing Farm Status Page.
- There is a bug; contact us.

Test Environment Preparation

If Testing Farm is not able to prepare the test environment, the test environment execution will fail. There is no point in continuing the testing process if the test environment is not properly prepared.

Artifacts Installation Failed

This can happen if the artifacts cannot all be installed because of conflicts, missing dependencies, etc. The viewer shows the logs from the artifact installation, which should help you identify the problem.

If your artifacts contain conflicting packages, you can use the exclude option of the install plugin in the prepare step.

See the tmt documentation for details.

The excluding works only for

Testing Farm installs all RPMs from all given artifacts. This can cause issues when multiple builds are tested together, for example with certain Bodhi updates. We plan to fix this in upcoming releases. See this issue for details.

Ansible Playbook Fails

In certain cases, the playbooks run in the pre-artifact-installation and post-artifact-installation sub-phases can fail. This usually happens because of a mirror outage or other connection problems. Try restarting the testing, and if the problem persists, contact us.

Testing Stuck

If the testing is stuck in progress after the pipeline timeout has passed, it usually means a bug in Testing Farm. Please file an issue.